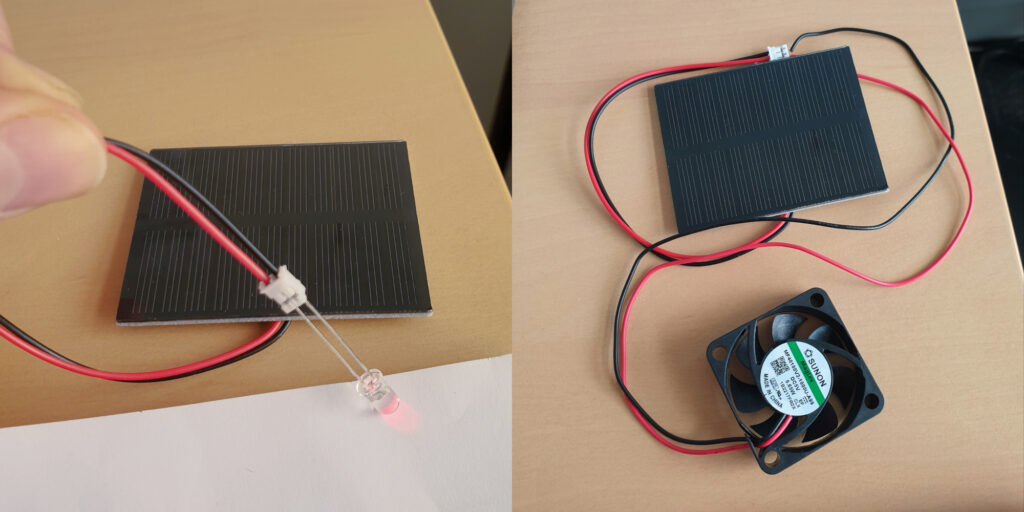

[In French] I've uploaded the documents I used last month for a one-hour lecture at a primary school (7-8 year old children), with a theoretical part on electrical energy conversion and a practical part with small PV panels.

Pierre Haessig – personal website

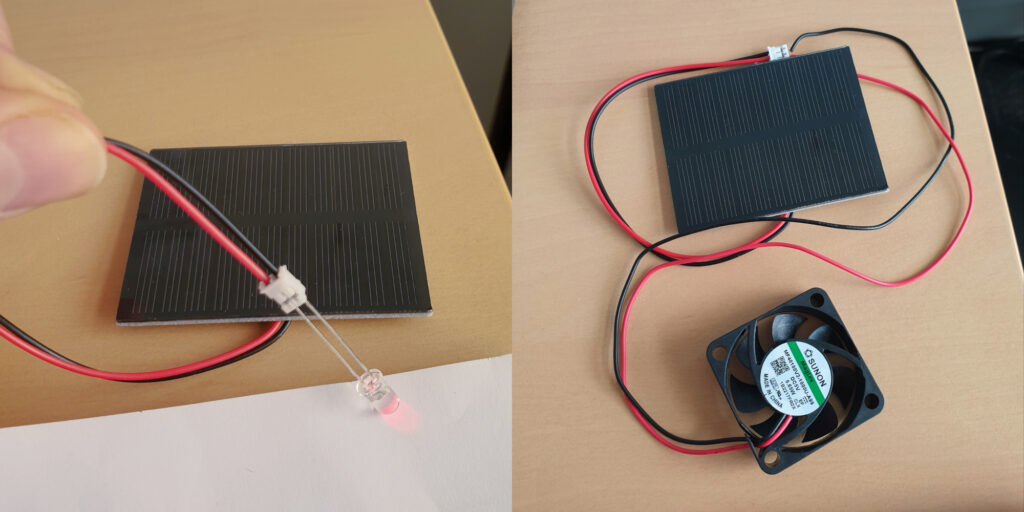

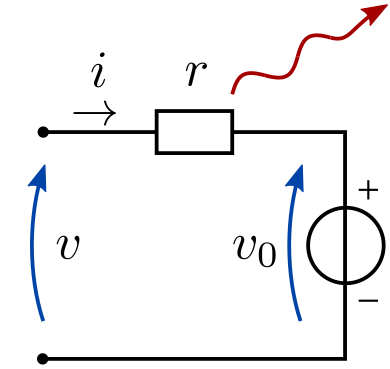

This post is meant to help people in search of a refurbished notebook. Indeed, it’s easy to get lost in processor references (“how does an Intel Core i5-8250U compare to an i5-10210U?” Spoiler: they are almost the same!). It is the outcome of me researching whether it is worth replacing my Lenovo Thinkpad T470 notebook, a 2017 model bought second-hand in 2020. While the conclusion is that I’ll probably wait a bit more, this was a nice piece of data research on the evolution of the computing power of office notebooks over the last decade.

In this post, I focus on key results, while the full dataset and associated Python code are available in the GitHub repository https://github.com/pierre-haessig/notebook-cpu-performance/. Also, there is a CPUs table, with characteristics and performance scores, hosted on Baserow. Testing this so-called “no-code” cloud database was an additional excuse for this project... While I don’t want to comment on my experience here, I’ll just say I found it a neat alternative to spreadsheets for doing database-style work (e.g. creating links between tables).

About the scores: I’ve extracted computing performance scores from the Geekbench 6 data browser https://browser.geekbench.com/ which conveniently makes its database public (in exchange for Geekbench users automatically contributing to the database for free). This test provides two scores: Single-Core and Multi-Core.

Geekbench explains that these scores are proportional to the processing speed, so a score which is twice as large means a processor that is twice as fast, that is a computing task completed in half the time, if I got it right.

About the processors selection: I’ve extracted the scores for CPUs found in typical refurbished office notebooks, that is midrange & low power CPUs, aka Intel Core i5 processors, from the so-called U series, but also corresponding AMD Ryzen 5. The earliest model is the Intel Core i5-5200U launched in 2015, while the most recent is the Intel Core Ultra 125U/135U from late 2023 (there wasn’t enough data for the 2024 Intel Core Ultra 2xx models). Notice that there are no refurbished notebooks of 2023 yet on the market, so there is some amount of guessing on what will be the "typical office notebook CPUs" of 2023-2025.

Enough talking, here are two graphs of processor performance.

First, a dot plot/boxplot chart showing CPU performance, ranked by Single-Core performance (which is close to, but not quite the same as, the Multi-Core rank):

The horizontal scale for the score is logarithmic, so that each vertical grid line means a difference by a factor of 2. This allows superimposing Single-Core (blue) and Multi-Core (green) data.

The diamonds are the median scores, while the lines show the variability. Indeed, there is no unique value for each CPU, due to the many factors which can affect computing performance: temperature, power source (battery vs. AC adapter) and energy saving policy (max performance vs. max battery life). To account for this, I’ve collected about 1000 samples for each CPU and summarized them by computing quantiles. The thick lines show the 25%–75% quantiles (also called the interquartile range, which contains half the samples), while the thin lines ending in whiskers show the 10%–90% quantiles (the range between the most extreme deciles, which contains 80% of the samples).
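The quantile summary described above can be sketched in a few lines. This is only an illustration using the Python standard library: the `quantile` helper, its linear interpolation rule and the list of scores are assumptions made for the example, not necessarily what the repository's notebook does.

```python
import math

def quantile(samples, q):
    """Quantile q (between 0 and 1) of samples, with linear interpolation."""
    s = sorted(samples)
    pos = q * (len(s) - 1)          # fractional rank of the quantile
    lo = math.floor(pos)
    hi = min(lo + 1, len(s) - 1)
    frac = pos - lo
    return s[lo] * (1 - frac) + s[hi] * frac

def summarize(samples):
    """Median, interquartile range and 10%-90% range of a list of scores."""
    return {
        "median": quantile(samples, 0.50),
        "iqr": (quantile(samples, 0.25), quantile(samples, 0.75)),
        "deciles": (quantile(samples, 0.10), quantile(samples, 0.90)),
    }

# Hypothetical scores for one CPU (not real Geekbench data)
scores = [1000 + 10 * k for k in range(101)]  # 1000, 1010, ..., 2000
summary = summarize(scores)
print(summary)
```

The three entries map to the diamond, the thick line and the thin line of the chart, respectively.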

Notice that to avoid data overcrowding, this is the “short” version of the chart where I picked one CPU model per generation, while there are typically two (like i5-1135 and i5-1145, with very close performance). The full chart variant (twice as long) can be found in the chart repository, along with the variants sorted by Multi-Core score.

The second chart shows the evolution of CPU performance over time. Only the median score is shown here, to avoid overcrowding the graph, but with all the CPU models of the dataset. This graph is interactive (perhaps a mouse is required, though): hovering each point reveals the processor name and details like the number of cores.

[EDIT: for some unknown reason, the tooltip which is supposed to appear when hovering points has an erratic display (it pops up a large blank rectangle). In the meantime, I've put the charts on a dedicated page which works fine]

The dot color and shape discriminate the CPU designer: Intel vs. AMD (the classical term “manufacturer” is not appropriate for AMD, nor for the latest Intel products). The gray dotted line shows the exponential trend, that is a constant-rate growth trend, in the spirit of Moore’s law (doubling every 2 years), albeit with a much smaller rate, see values below.
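For readers wondering how such an exponential trend can be obtained, here is a minimal sketch: a least-squares line through the points (year, log2 of the score), whose slope gives the growth rate. The function name and the data are hypothetical (a made-up series growing by exactly 10% per year), and the actual fitting code in the repository may differ.

```python
import math

def fit_exponential_trend(years, scores):
    """Least-squares line through (year, log2(score)).

    Returns (rate, doubling_time): the yearly growth rate (0.10 means +10%/y)
    and the number of years needed for the score to double.
    """
    logs = [math.log2(s) for s in scores]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    sxx = sum((x - mean_x) ** 2 for x in years)
    slope = sxy / sxx               # doublings per year
    return 2 ** slope - 1, 1 / slope

# Hypothetical data: a score growing by exactly 10% per year from 2015
years = list(range(2015, 2024))
scores = [1000 * 1.10 ** (y - 2015) for y in years]
rate, doubling_time = fit_exponential_trend(years, scores)
print(f"growth: {rate:+.1%}/y, doubling every {doubling_time:.1f} years")
```

Fitting in log space is what makes the exponential trend a straight line, which is also why it plots as a line on a logarithmic score axis.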

This second plot was done with Vega-Altair which is very nice for data exploration and interactivity. However, it is perhaps a bit less customizable than my “old & reliable” plotting companion, Matplotlib, used for the first chart. In particular, I didn’t manage to replicate the nice log2 scale of the first chart, which I created by manually setting the tick positions and labels (see the Retrieve_geekbench.ipynb notebook, “Log2 scale variant”), although this could be achieved more cleanly with Matplotlib’s tick formatter. So here is the log2 variant of the performance over time chart, albeit with the raw log2 scores. With this scale, the exponential trend becomes a straight line.

How to read these raw log2 scores: values [0, 1, 2, 3] correspond to [1000, 2000, 4000, 8000], while the general formula is y = 1000 × 2^x. Also, because there are grid lines for half scores (0.5 → 1000×√2 ≈ 1414), scores which differ by a factor of 2 are here separated by two grid lines instead of just one in the first plot.
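The conversion between raw log2 values and scores is short enough to write down. This is a small sketch of the formula above; the function and constant names are mine, chosen for the illustration.

```python
import math

REFERENCE_SCORE = 1000  # score mapped to 0 on the raw log2 axis

def to_log2_axis(score):
    """Raw log2 value of a score."""
    return math.log2(score / REFERENCE_SCORE)

def from_log2_axis(x):
    """Score corresponding to a raw log2 value x."""
    return REFERENCE_SCORE * 2 ** x

for x in [0, 0.5, 1, 2, 3]:
    print(x, round(from_log2_axis(x)))
```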

What can be said from the charts above?

Sure, there has been some progress in CPU performance over the decade!

→ In both cases, the growth is well slower than the doubling every 2 years (that is +41%/y) of Moore’s law.
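The +41%/y figure comes from converting a doubling time into a yearly growth rate. A short sketch of the conversion in both directions (function names are mine):

```python
import math

def yearly_rate(doubling_time_years):
    """Yearly growth rate equivalent to doubling every given number of years."""
    return 2 ** (1 / doubling_time_years) - 1

def doubling_time(yearly_growth):
    """Number of years needed to double at a given yearly growth rate."""
    return math.log(2) / math.log(1 + yearly_growth)

print(f"{yearly_rate(2):+.0%}/y")  # Moore's law: doubling every 2 years
```

Doubling every 2 years means multiplying by √2 ≈ 1.41 each year.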

There is a clear effect of diminishing returns within the general trend to add more CPU cores:

The exponential fit gives a false sense of smooth progress in performance, while the true evolution is a bit more stepwise, with some processors “lagging behind” and others “ahead of their time”:

Up to this point, I’ve only commented on the median score, while deferring the discussion about performance variability shown on the first plot.

Et voilà, I guess that’s enough for one post. Of course, I only covered computing metrics. For example, when I said in the intro that Intel Core i5-10210U and i5-8250U are almost the same, these processors are still 2 years apart and the corresponding notebooks may come with different Wi-Fi and Bluetooth connection standards, so that the more recent 10210U may still be more interesting.

In a following post, I will write about the second part of this dataset: the notebook offers table, which links the system price to its CPU performance, to get a Pareto-style trade-off chart of price vs. performance. Notice that this dataset and the draft plotting code are already available in the repository (Offers_plot.ipynb notebook and Baserow Notebook offers table).

This is a test post to see how easy it is to embed Vega-Lite data visualizations in a WordPress page. Oddly enough, I didn't find an all-in-one tutorial for this. If you see an interactive bar chart below (interactive means: bar color reacts to mouse hover), it means this method works!

Integration approach: I'm using the Vega-Embed helper library, since it is described as the “easiest way to use Vega-Lite on your own web page” on the official Vega-Lite doc. In this post, I'm essentially adapting Vega-Embed's README for WordPress. Two steps are necessary:

Adding custom JavaScript scripts to the `<head>` section of a WordPress site is extensively covered:

I chose the extension route, with WPCode since it seems the most popular option. In the end, I find it does the job, albeit having perhaps too many features compared to what is needed for this task.

The objective is to inject the following HTML code in the site header, which will load Vega, Vega-Lite and Vega-Embed:

```html
<!-- Import Vega, Vega-Lite and vega-embed -->
<script src="https://cdn.jsdelivr.net/npm/vega@5"></script>
<script src="https://cdn.jsdelivr.net/npm/vega-lite@5"></script>
<script src="https://cdn.jsdelivr.net/npm/vega-embed@6"></script>
```

With WPCode installed, this requires clicking in the dashboard:

Other than that, all the options below the editor are fine, in particular the Snippet insertion location which defaults to “Site Wide Header”. If you open the source code of this page (Ctrl+U) and see the lines with <script src="https://cdn.jsdelivr.net/npm/vega... (scrolling down to about line 206...), this means the method worked for me!

To avoid importing Vega on every page, I've used the optional “Smart Conditional Logic” of WPCode Snippet editor. There it can be specified that the Snippet should show only on specific pages like this one (in the “Where (page)” panel). In truth, the “Page/Post” condition is restricted to the PRO version, but “Page URL” is available.

Several pages can be specified by “+Add new group”, since groups of rules combine with boolean OR (whereas rules within a group combine with AND).

With this display logic, the Vega import line shouldn't be visible on most pages of this site like Home.

Once the Vega libraries are imported, what remains is to add to the body of the page the two bits of HTML code which will load the specific visualization we wish to display:

- a `<div>`, with a uniquely chosen id, where the visualization will appear
- a `<script>` tag which will read Vega's JSON visualization description (the “spec” in Vega's word) and load it to the target tag, with a call like `vegaEmbed('#vis', spec)`

Here I'll use again the example of Vega-Embed’s README:

```html
<div id="vis">vega viz will go here</div>

<script type="text/javascript">
  var spec = 'https://raw.githubusercontent.com/vega/vega/master/docs/examples/bar-chart.vg.json';
  vegaEmbed('#vis', spec)
    .then(function (result) {
      // Access the Vega view instance (https://vega.github.io/vega/docs/api/view/) as result.view
    })
    .catch(console.error);
</script>
```

Using the WordPress block editor (Gutenberg editor), I'm adding a “Custom HTML” block with that content. In fact, it's possible to split the target tag and the script in two blocks (again, if you look at the source code with Ctrl+U, you should see the script just below, while the block tag with id="vis" is above).

Et voilà!

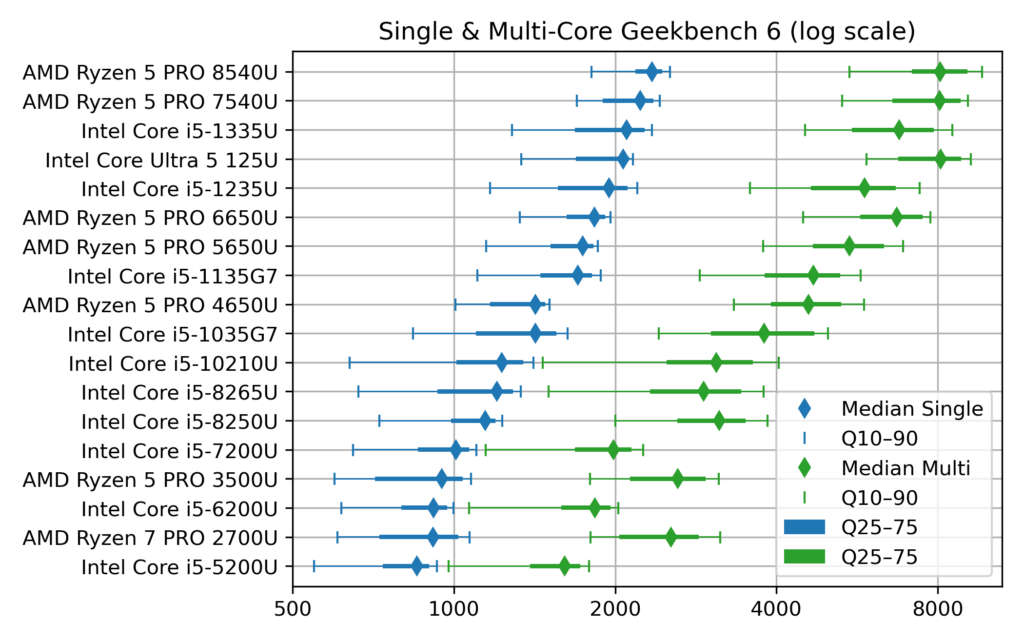

At the VéloMix hackathon at IMT Atlantique in Rennes, I met with Guillaume Le Gall (ESIR, Univ Rennes) and Matthieu Silard (IMT Atlantique) working on battery charging monitoring. We ended up working on the problem of State of Charge (SoC) estimation, that is evaluating the charge level of a battery by monitoring its voltage and current over time (and notably not its open circuit voltage).

I had heard SoC estimation was often performed by the Kalman filter (and in particular with EKF, its extended version), but I had never had the occasion to implement it. Time was too short to get it working on the day of the hackathon, but now I have drafted a Python implementation, or more precisely three implementations:

All these are available in a single Jupyter notebook:
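To give a flavor of what EKF-based SoC estimation looks like, here is a minimal generic sketch. It is not one of the implementations from the notebook: the battery model (Coulomb counting plus a made-up open circuit voltage curve and a series resistance), all parameter values and the function names are assumptions chosen only to make the example run.

```python
import random

# All numbers below are hypothetical, chosen only to make the sketch run
CAPACITY_AS = 2.0 * 3600   # battery capacity: 2 Ah, in ampere-seconds
R_INT = 0.05               # internal resistance (ohm)
DT = 1.0                   # sampling period (s)

def ocv(soc):
    """Made-up open circuit voltage curve (V) as a function of SoC in [0, 1]."""
    return 3.0 + 0.8 * soc + 0.4 * soc ** 2

def docv_dsoc(soc):
    """Derivative of the OCV curve, used to linearize the measurement."""
    return 0.8 + 0.8 * soc

def ekf_step(soc, var, current, voltage, q=1e-8, r=1e-4):
    """One EKF iteration: returns the updated SoC estimate and its variance.

    current > 0 means charging; q and r are the process and measurement
    noise variances.
    """
    # Prediction by Coulomb counting
    soc_pred = soc + current * DT / CAPACITY_AS
    var_pred = var + q
    # Correction using the terminal voltage measurement
    h = docv_dsoc(soc_pred)
    innovation = voltage - (ocv(soc_pred) + R_INT * current)
    gain = var_pred * h / (h * var_pred * h + r)
    return soc_pred + gain * innovation, (1 - gain * h) * var_pred

# Simulated discharge at 1 A: the filter starts from a wrong initial guess
random.seed(0)
true_soc, est_soc, est_var = 0.8, 0.5, 0.1
for _ in range(600):
    current = -1.0
    true_soc += current * DT / CAPACITY_AS
    voltage = ocv(true_soc) + R_INT * current + random.gauss(0, 0.01)
    est_soc, est_var = ekf_step(est_soc, est_var, current, voltage)

print(f"true SoC: {true_soc:.3f}, estimated SoC: {est_soc:.3f}")
```

The point of the filter is visible in the simulation: the estimate starts far from the truth (0.5 instead of 0.8), and the voltage measurements pull it back, which pure current integration could not do.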

(Post originally in French, since it’s about a presentation in French...)

My presentation “Optimisation des microréseaux” (microgrid optimization), given at the École normale supérieure de Rennes for the Rencontres Mécatroniques, is available online. Aimed at mechatronics students, it was meant to be fairly pedagogical in explaining the issues of sizing and energy management of energy systems.

Many of the ideas were developed within the PhD thesis of Elsy El Sayegh (defended in March 2024) with my colleague Nabil Sadou, and we keep exploring them with Jean NIKIEMA, a new PhD student in the AUT team!

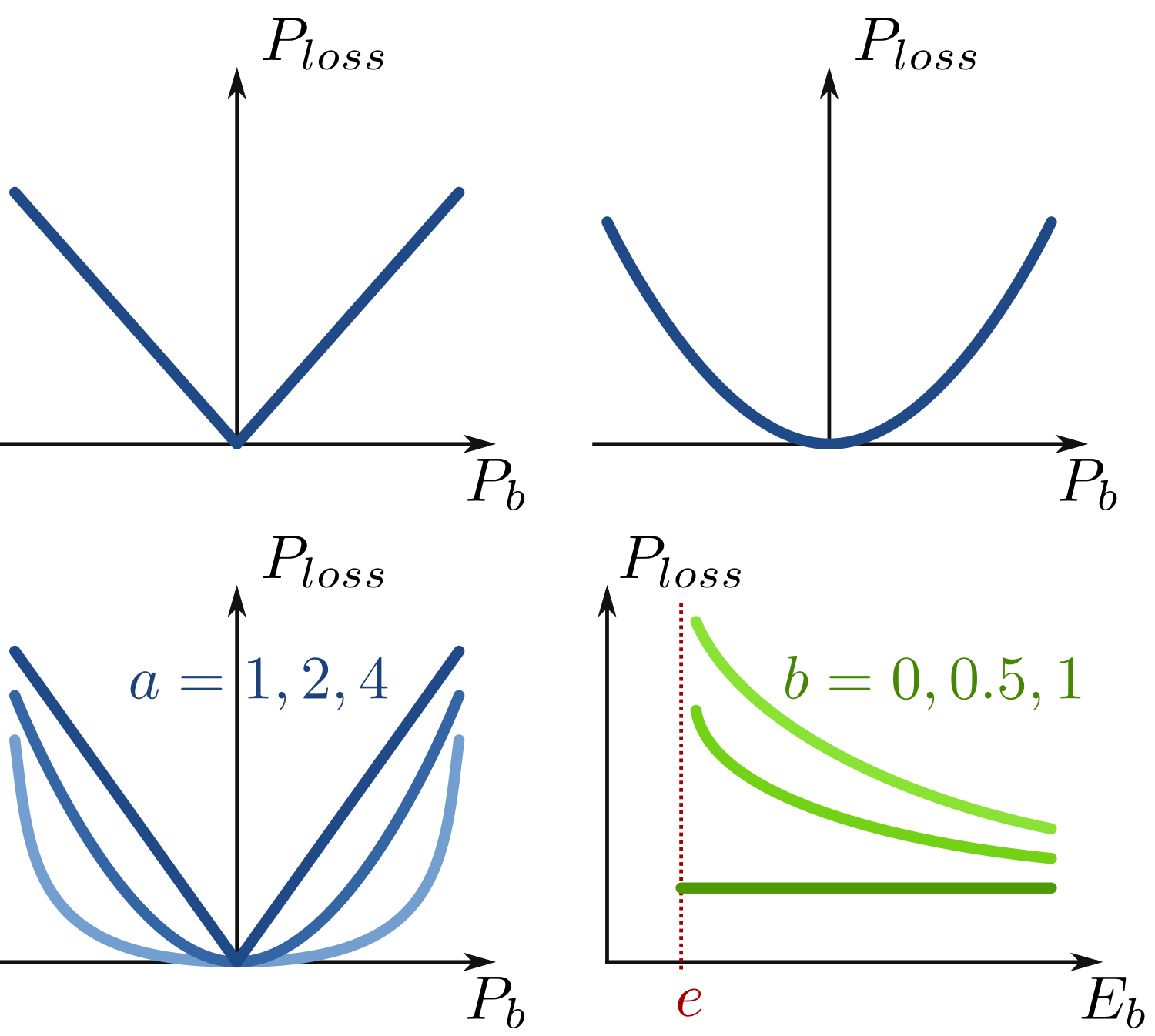

I’ve put online (on HAL) the manuscript I’ve submitted to PowerTech 2021 (⚠ not yet accepted [Edit: it was eventually accepted. Presentation video is available]). It discusses the modeling of energy storage losses. The question is how to model these losses using convex functions, so that the model can be embedded efficiently in optimal energy management problems.

This is possible thanks to a constraint relaxation: losses are assumed to be greater than (≥) their expression, rather than equal (=). With a convex loss expression, the resulting constraint is convex. In applications where energy losses tend to be naturally minimized, this works great. This means that the inequality is tight at the optimum: losses are eventually equal to their expression. Notice that in applications where dissipating energy can be necessary at the optimum, this relaxation can fail: losses are strictly greater than their expression, meaning that there are extraneous losses!
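A tiny numerical check can illustrate why the relaxation helps. Assuming, for the sake of the example only, a loss expression proportional to the squared power (the coefficient `r` and the two operating points are made up), the midpoint of two points lying on the loss curve violates the equality constraint but satisfies the relaxed inequality, which is what convexity of the feasible set requires.

```python
def loss_expression(power, r=0.05):
    """Hypothetical convex loss model: losses proportional to squared power."""
    return r * power ** 2

def satisfies_equality(power, losses, tol=1e-9):
    """Original constraint: losses = expression (non-convex set)."""
    return abs(losses - loss_expression(power)) <= tol

def satisfies_relaxation(power, losses, tol=1e-9):
    """Relaxed constraint: losses >= expression (convex set)."""
    return losses >= loss_expression(power) - tol

# Two operating points lying exactly on the loss curve
a = (-2.0, loss_expression(-2.0))   # discharging
b = (4.0, loss_expression(4.0))     # charging
mid = ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)

print(satisfies_equality(*mid))     # the midpoint leaves the curve
print(satisfies_relaxation(*mid))   # but stays in the relaxed set
```

The midpoint carries more losses than its expression requires, which is exactly the kind of extraneous losses the relaxation permits and that an optimizer will remove whenever losses are costly.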

I’ve had this topic in mind for some years now, with early discussion at PowerTech 2015 with Olivier Megel (then at ETH Zurich). Fast-forwarding to spring 2020, I had a nice exchange with Jonathan Dumas and Bertrand Cornélusse (Univ. Liège) which provided me with related references in the field of power systems.

After some experiments at the end of the last academic year, I’ve assembled these ideas during the autumn (conference deadline effect), realizing that, as often, there was quite a large amount of literature on the topic. These are papers I would have remained unaware of, had I not written the introduction of this paper! Still, I found the subject was not exhausted (also a common pattern) so that I could position my ideas.

I believe that the contribution is:

The typical effect covered by the loss model I propose is when power losses (proportional to the squared power) vary with the state of charge (typically at the very end of charge or discharge). In short, it unites and generalizes classical loss models, while preserving convexity.

I don’t think this will revolutionize the field, but hopefully it can help people in energy management using physically more realistic battery models, while still keeping the computational efficiency of convex optimization.

More than a year after the start of its development, I’m now pushing the “final” version of my tiny web app “Electric vs Thermal vehicle calculator, with uncertainty”. It allows comparing the ecological merit of electric versus thermal vehicles (greenhouse gas emissions only) on a lifecycle basis (manufacturing and usage stages).

Compared to alternatives (featuring nicer designs), this app enables changing the value of all the inputs to watch their influence, thus the name “calculator”. Of course, a nice set of presets is provided. Also, it is perhaps the only comparator of this kind to handle the uncertainty of all these inputs: it uses input ranges (lower and upper bounds) instead of just nominal values. Therefore, one can check how robust the conclusion that electric vehicles are better than their thermal counterparts is (spoiler: it is, unless using highly pessimistic, if not biased, inputs).

I started this app in spring 2019, after I came across the blog post “Electric car: 697,612 km to become green! True or false?” by Damien Ernst (Université de Liège). At that time, I was not familiar with the lifecycle analysis of electric vehicles (EVs) and the article seemed at first pretty convincing. I was surprised to discover the sometimes violent feedback Prof. Ernst received. Fortunately, I also found more constructive responses, e.g. from Auke Hoekstra (Zenmo, TU Eindhoven), whom I soon realized to be a serial myth buster on the question of EV vs thermal vehicles(*).

I quickly realized that the key question for making this comparison is the choice of input data (greenhouse gas emissions for battery manufacturing, energy consumption of electric and thermal vehicles …). Indeed, with a given set of inputs, the computation of the “distance to CO₂ parity” is simple arithmetic. I created a calculator app which implements this computation. This enables playing with the value of each input to see its effect on the final question: “Are EVs better than thermal vehicles?”.

While discussing Ernst’s post with colleagues, I got feedback that perhaps the first big issue with his first claim “697,612 km to become green” is that it conveys a false sense of extreme precision. Indeed, high uncertainty around some key inputs prevents giving so many significant digits. And indeed, it is because several, if not all, inputs are open to discussion (fuel consumption: real drive or standard tests, electricity mix for EV charging: 100% coal or French nuclear power…?) that there are all these clashing newspaper articles and Twitter discussions piling up!
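To make the “simple arithmetic” and the role of input ranges concrete, here is a hedged sketch. It is not the app's actual code: the formula is reduced to three inputs, the function names are mine, and all numerical values are made up for illustration.

```python
def parity_distance(extra_manufacturing_kg, ev_g_per_km, thermal_g_per_km):
    """Distance (km) after which the EV's extra manufacturing emissions are repaid."""
    saving_per_km = (thermal_g_per_km - ev_g_per_km) / 1000  # kg CO2e per km
    if saving_per_km <= 0:
        return float("inf")  # the EV never catches up with these inputs
    return extra_manufacturing_kg / saving_per_km

def parity_distance_range(extra_manufacturing_kg, ev_g_per_km, thermal_g_per_km):
    """Same computation, with each input given as a (lower, upper) pair."""
    # Most favorable case for the EV: small extra emissions, large saving per km
    best = parity_distance(extra_manufacturing_kg[0], ev_g_per_km[0], thermal_g_per_km[1])
    # Least favorable case: large extra emissions, small saving per km
    worst = parity_distance(extra_manufacturing_kg[1], ev_g_per_km[1], thermal_g_per_km[0])
    return best, worst

# Made-up inputs, for illustration only
nominal = parity_distance(5000, 50, 200)
low, high = parity_distance_range((4000, 6000), (40, 80), (180, 220))
print(f"nominal: {nominal:.0f} km, range: {low:.0f} to {high:.0f} km")
```

Even with these modest made-up ranges, the result spans almost a factor of 3, which shows why quoting six significant digits makes little sense.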

When I started the app, I thought I would save the time of researching good sets of inputs, since it is a calculator where the user can change all the inputs to his/her choice. This was a short-lived illusion because I soon realized that the app would be much better with a set of reasonable choices (presets), so that I needed to do the job anyway. This is in fact pretty time consuming, since there is a need for serious research for almost each input. Now, I don't consider this work finished (it cannot be!), but I think I've paid fair attention and found reliable sources (e.g. meta-analyses) for each input. My choices are documented at the end of the presentation page.

In this process, I've learned a lot, and the main meta knowledge I take away is that inputs need to be fresh. Indeed, like in the field of renewables, the main parameters of EV batteries are changing fast (increasing size, decreasing per kWh manufacturing emissions…) and so is the European power sector (e.g. the emissions per kWh for EV charging).

(*) Auke Hoekstra was perhaps irritated to endlessly repeat his arguments everywhere, so he collected his key methodological assumptions in a journal paper “The Underestimated Potential of Battery Electric Vehicles to Reduce Emissions”, Joule, 2019, DOI: 10.1016/j.joule.2019.06.002 (with an August 2020 update written for the green party Bundestag representatives).

Because of (or thanks to) the lockdown, I taught my electrical engineering course “Énergie Électrique” remotely in May–June 2020.

To try to keep the students “in the rhythm” over the 5 weeks of the course, I created a series of practice questions (mostly multiple-choice questions) for the Moodle platform. Here they are now as a downloadable archive (text + file directly importable into Moodle), for any interested colleagues.

More details on the content and the pedagogical use can be found on the dedicated page.

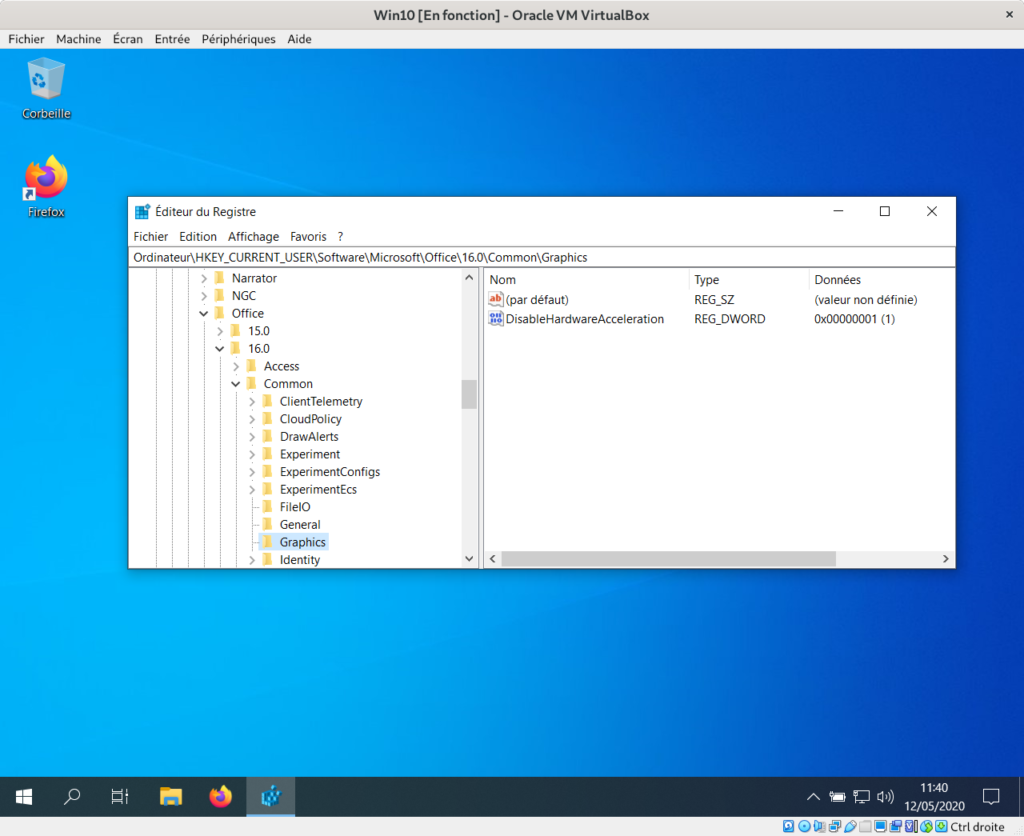

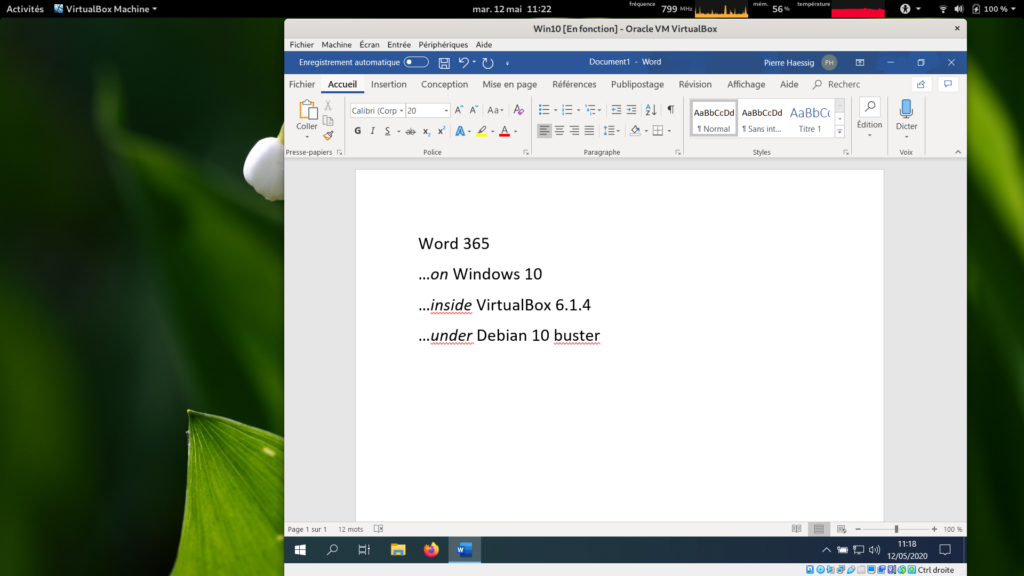

This is just a short note that may be useful to other people with a similar setup. I just installed a fresh Windows 10 virtual machine (VM) on my Linux system (Debian 10 “buster”, with VirtualBox 6.1.4 coming from Lucas Nussbaum’s unofficial VirtualBox repository).

One of the main objectives of this VM is to have Microsoft Office 365 for my work. Unfortunately, I faced a “blank window” issue which makes it totally unusable! The first symptoms appeared around the end of the installation setup, but I didn't pay much attention since it still completed fine. However, at the first launch of Word (or PowerPoint or Excel), I got two blank windows: in the background a big white window which was probably Word, and in the foreground a smaller blank window, perhaps the license acceptance dialog, where I was probably supposed to press Yes if only I could see the button...

Now, I did some searching, and I did find some similar symptoms, but no adequate solutions. I only learned that the problem may come from Office’s use of hardware acceleration for display. Since I had previously activated the 3D hardware acceleration in the VM settings (it sounded like a good thing to activate), I tried to uncheck it, with no effect.

Then, it's possible to disable Office hardware acceleration, and this worked for me. However, among the three ways to do it that I found, only the most complicated one worked (regedit). Here are the three options:

In the end, only the arcane option 3 worked. As mentioned in the source, on my fresh Windows 10 setup, the subkey Graphics needs to be created inside HKEY_CURRENT_USER\Software\Microsoft\Office\16.0\Common (the source says that version “16.0” is for Office 2016, but it seems Office 365 in 2020 is also version 16). Then, create the DWORD value DisableHardwareAcceleration and set its value to 1.
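For those who prefer a script to clicking through regedit, the same registry change can in principle be done with Python's standard `winreg` module. This is an untested sketch of the manual steps above, not something I ran on the VM; the function name is mine, and the version string should be adapted if your Office uses a different one.

```python
import sys

OFFICE_VERSION = "16.0"  # per the post: Office 2016, and Office 365 as of 2020
KEY_PATH = "\\".join(
    ["Software", "Microsoft", "Office", OFFICE_VERSION, "Common", "Graphics"]
)
VALUE_NAME = "DisableHardwareAcceleration"

def disable_office_hardware_acceleration():
    """Create the Graphics subkey and set the DWORD value to 1 (Windows only)."""
    if sys.platform != "win32":
        print("Not running on Windows: nothing done")
        return False
    import winreg  # standard library, only available on Windows
    # CreateKeyEx opens the key, creating missing subkeys such as Graphics
    with winreg.CreateKeyEx(winreg.HKEY_CURRENT_USER, KEY_PATH, 0,
                            winreg.KEY_SET_VALUE) as key:
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_DWORD, 1)
    return True

if __name__ == "__main__":
    disable_office_hardware_acceleration()
```

Since the key lives under HKEY_CURRENT_USER, no administrator rights should be needed, but as with any registry edit, proceed with care.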

After a reboot (is it necessary?), I could indeed run Word 365 properly and accept all the first run dialogs. It seems to run fine now. Phew!

These last weeks, I’ve read William S. Cleveland’s book “The Elements of Graphing Data”. I had heard it’s a classical essay on data visualization. Of course, on some aspects, the book shows its age (first published in 1985), for example in the seemingly exceptional use of color on graphs. Still, most ideas are still relevant and I enjoyed the reading. Some proposed tools have become rather common, like loess curves. Others, like the many charts he proposes to compare data distributions (beyond the common histogram), are not so widespread but nevertheless interesting.

One of the proposed tools I wanted to try is the (Cleveland) dot plot. It is advertised as a replacement of pie charts and (stacked) bar charts, but with a greater visualization power. Cleveland conducted scientific experiments to assess that superiority, but it’s not detailed in the book (perhaps it is in the Cleveland & McGill 1984 paper).

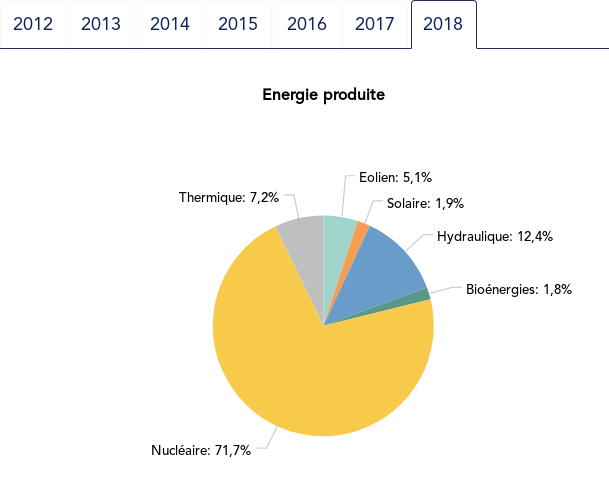

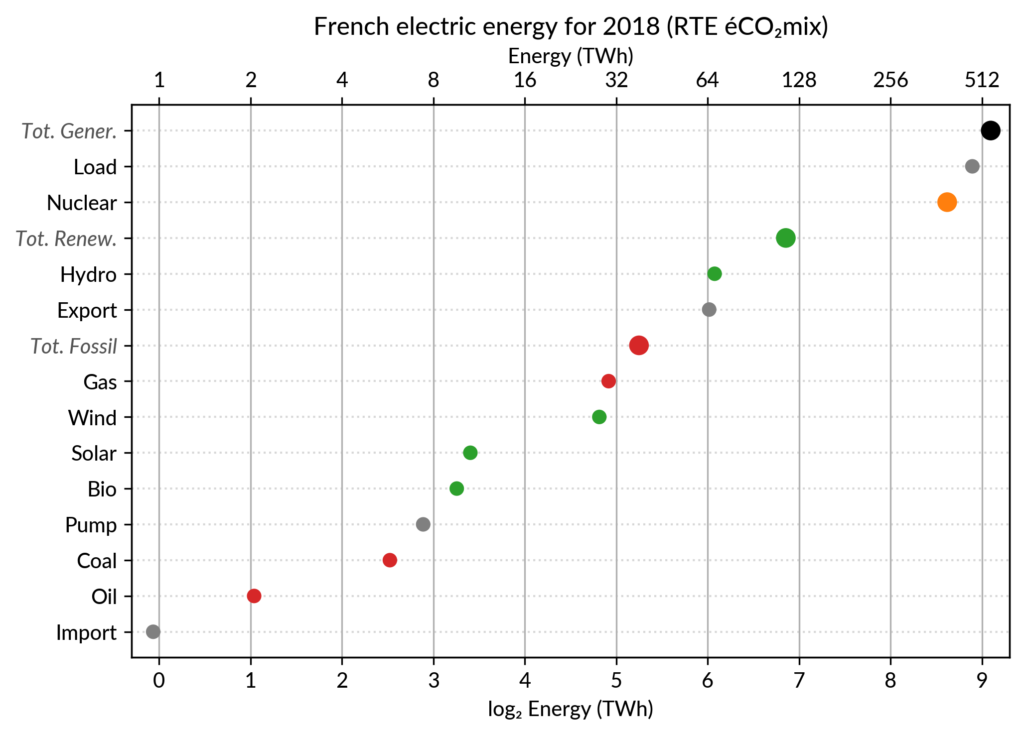

I’ve explored the visualization power of dot plots using the French electricity data from RTE éCO₂mix (RTE is the operator of the French transmission grid). I’ve aggregated the hourly data to get yearly statistics similar to RTE’s yearly statistical report on electricity (« Bilan Électrique »).

Such yearly energy data is typically represented with pie charts to show the share of each category of power plants. This is RTE’s pie chart for 2018 (from the Production chapter):

However, Cleveland claims that the dot plot alternative enables more efficient reading of single point values and also easier comparison of different points together. Here is the same data shown with a simple dot plot:

I’ve colored plant types by three general categories:

Subtotals for each category are included. Gray points are either not a production (load, exports, pumped hydro) or cannot be categorized (imports).

Compared to the pie chart, we benefit from the ability to read the absolute values rather than just the shares. However, how to read those shares?

This is where the log₂ scale, also promoted by Cleveland in his book, comes into play. It serves two goals. First, like any log scale, it avoids the unreadable clustering of points around zero when plotting values with different orders of magnitude. However, Cleveland specifically advocates log₂ rather than the more common log₁₀ when the difference in orders of magnitude is small (here less than 3, with 1 to 500 TWh) because log₁₀ would yield too few tick marks (1, 10, 100, 1000 here) and also because log₂ aids reading ratios of two values:

Still, I guess I’m not the only one unfamiliar with this scale, so I made myself a small conversion table:

| Δlog | ratio a/b (%) | ratio b/a |
|---|---|---|
| 0.5 | 71% (~2/3 to 3/4) | 1.4 |
| 1 | 50% (1/2) | 2 |
| 1.5 | 35% (~1/3) | 2.8 |
| 2 | 25% (1/4) | 4 |
| 3 | 12% | 8 |
| 4 | 6% | 16 |
| 5 | 3% | 32 |
| 6 | 1.5% | 64 |

As an example, Wind power (~28 TWh) is at a distance of 2, in log₂ scale, from the Renewable Total, so it is about 25% of it. Hydro is distant by less than 1, so ~60%, while Solar and Bioenergies are at about 3.5, so ~8% each.
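The conversion table is nothing more than powers of two, so it can be regenerated (or extended to other distances) with a few lines. The function name is mine:

```python
def ratios(delta_log2):
    """Ratios a/b and b/a for two values a < b separated by delta_log2."""
    return 2 ** -delta_log2, 2 ** delta_log2

for delta in [0.5, 1, 1.5, 2, 3, 4, 5, 6]:
    a_over_b, b_over_a = ratios(delta)
    print(f"{delta:>4}  {a_over_b:6.1%}  {b_over_a:5.1f}")
```

For instance, `ratios(3.5)[0]` gives the share read for Solar and Bioenergies within the Renewable Total.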

Of course, the log scale blurs the precise value of large shares. In particular, Nuclear (distant by 0.5 to the total generation) can be read to be somewhere between 65% and 80% of the total, while the exact share is 71.2%. The pie chart may seem more precise since the Nuclear part is clearly slightly less than 3/4 of the disc. However, Cleveland warns us that the angles 90°, 180° and 270° are special easy-to-read anchor values whereas most other values are in fact difficult to read. For example, how would I estimate the share of Solar in the pie chart without the “1.9%” annotation? On the log₂ scaled dot plot, only a little bit of grid line counting is necessary to estimate the distance between Solar and Total Generation to be ~5.5, so indeed about 2% (with the help of the conversion table…).

Along with the pie chart, the other classical competitor to dot plots is the bar chart. It’s actually a stronger competitor since it avoids the pitfall of the poorly readable angles of the pie chart. I (with much help from Cleveland) see three arguments for favoring dots over bars.

The weakest one may be that dots create less visual clutter. However, I see a counter-argument that bars are more familiar to most viewers, so if it were only for this, I may still prefer using bars.

The second argument is that the length of the bars would be meaningless. This argument only applies when there is no absolute meaning for the common “root” of the bars. This is the case here with the log scale. It would also be the case with a linear scale if, for some reason, the zero is not included.

The third argument is an extension of the first one (better clarity) in the case when several data points for each category must be compared. Using bars there are two options:

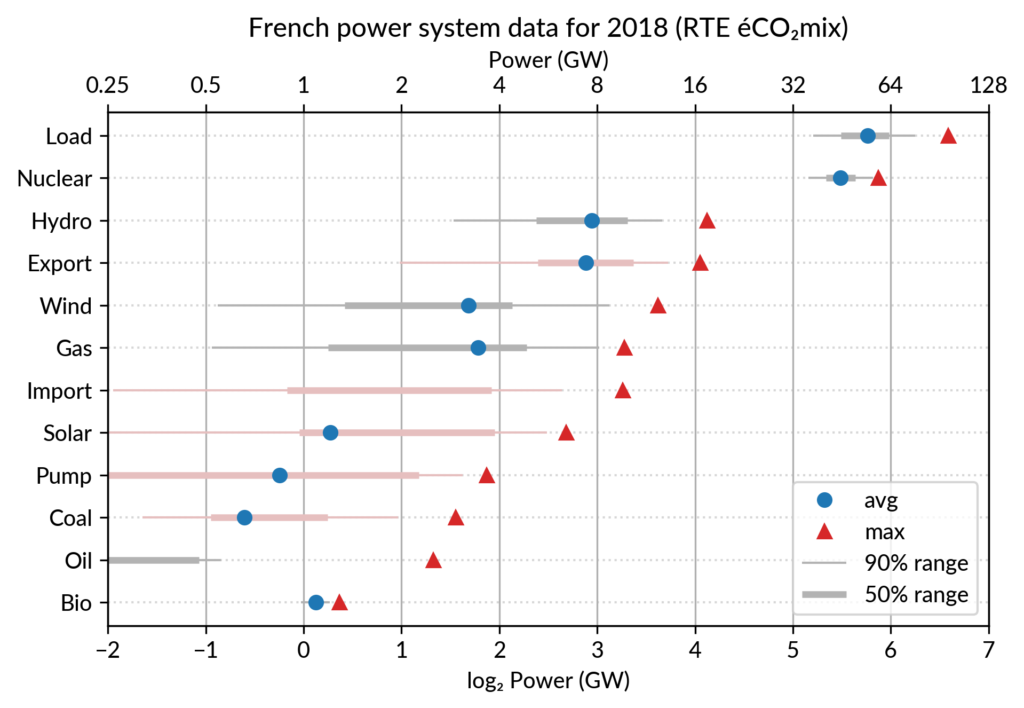

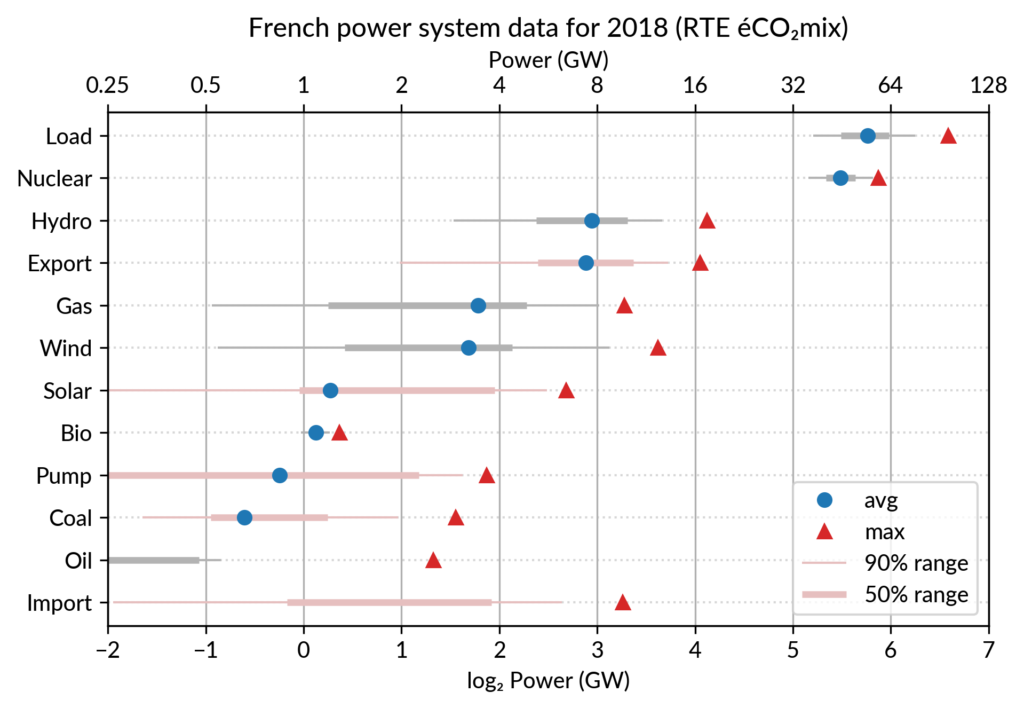

This brings me to the case where dot plots shine most: multiway dot plots.

The compactness of the “dot” plotting symbol (regardless of the actual shape: disc, square, triangle…) compared to bars allows superposing several data points for each category.

Cleveland presents multiway dot plots mostly by stacking horizontally several simple dot plots. However, now that digital media allows high quality colorful graphics, I think that superposition on a single plot is better in many cases.

For the electricity data, I plot for each plant category:

The maximum power is interesting as a proxy to the power capacity. The average power is simply the previously shown yearly energy production data, divided by the duration of the year. The benefit of using the average power is that it can be superimposed on the plot with the power capacity since it has the same unit. Also, the ratio of the two is the capacity factor of the plant category, which is a third interesting information.
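As a worked example of these definitions, here is a short sketch. The yearly energy for Wind (27.8 TWh) is the 2018 value I get from summing the hourly records, but the maximum power of 12 GW is a made-up placeholder, and the function names are mine.

```python
HOURS_PER_YEAR = 8760  # non-leap year

def average_power_gw(yearly_energy_twh, hours=HOURS_PER_YEAR):
    """Average power (GW) from a yearly energy (TWh)."""
    return yearly_energy_twh * 1000 / hours  # 1 TWh = 1000 GWh

def capacity_factor(average_power, max_power):
    """Ratio of average to maximum power (same unit for both)."""
    return average_power / max_power

# Wind 2018: 27.8 TWh; the 12 GW maximum power is a hypothetical value
wind_avg = average_power_gw(27.8)
wind_cf = capacity_factor(wind_avg, 12.0)
print(f"average: {wind_avg:.2f} GW, capacity factor: {wind_cf:.0%}")
```

On a log₂ scale, the capacity factor can be read directly as the distance between the average and maximum dots of a category.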

Since it is recommended to sort the categories by value (to ease comparisons), there are two possible plots:

The two types of sorts are slightly different (e.g. switch of Wind and Gas) and I don’t know if one is preferable.

Since I felt the superposition of the max and average data was leaving enough space, I packed 4 more numbers by adding gray lines showing the 90% and 50% range of the power distribution over the year. With these lines, the chart starts looking like a box plot, albeit pretty non-standard.

However, I faced one issue with the quantiles: some plant categories are shut down (i.e. power ≤ 0) for a significant fraction of the year:

To avoid having several quantiles clustered at zero, I chose to compute them only for the running hours (when >0). To warn the viewer, I drew those peculiar quantiles in light red rather than gray. Spending a bit more time, it would be possible to stack on the right a second dot plot showing just the shutdown times to make this more understandable.
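The “running hours only” quantiles can be sketched as follows. This is an illustration with the standard library and a made-up power series, not the notebook's code; in particular, taking the 5%–95% and 25%–75% quantiles for the 90% and 50% ranges is my assumption here.

```python
import math

def quantile(sorted_values, q):
    """Quantile q (0..1) of already sorted values, with linear interpolation."""
    pos = q * (len(sorted_values) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac

def running_quantiles(powers, qs=(0.05, 0.25, 0.75, 0.95)):
    """Quantiles over running hours only (power > 0), plus the shutdown fraction."""
    running = sorted(p for p in powers if p > 0)
    shutdown_fraction = 1 - len(running) / len(powers)
    if not running:
        return None, shutdown_fraction  # the plant never ran
    return [quantile(running, q) for q in qs], shutdown_fraction

# Hypothetical plant: shut down 60% of the time, otherwise between 1 and 2 GW
powers = [0.0] * 60 + [1.0 + k / 39 for k in range(40)]
naive_q25 = quantile(sorted(powers), 0.25)   # clustered at zero
quantiles, shutdown = running_quantiles(powers)
print(naive_q25, [round(q, 2) for q in quantiles], round(shutdown, 2))
```

Returning the shutdown fraction alongside the quantiles keeps the information that would feed the second dot plot of shutdown times mentioned above.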

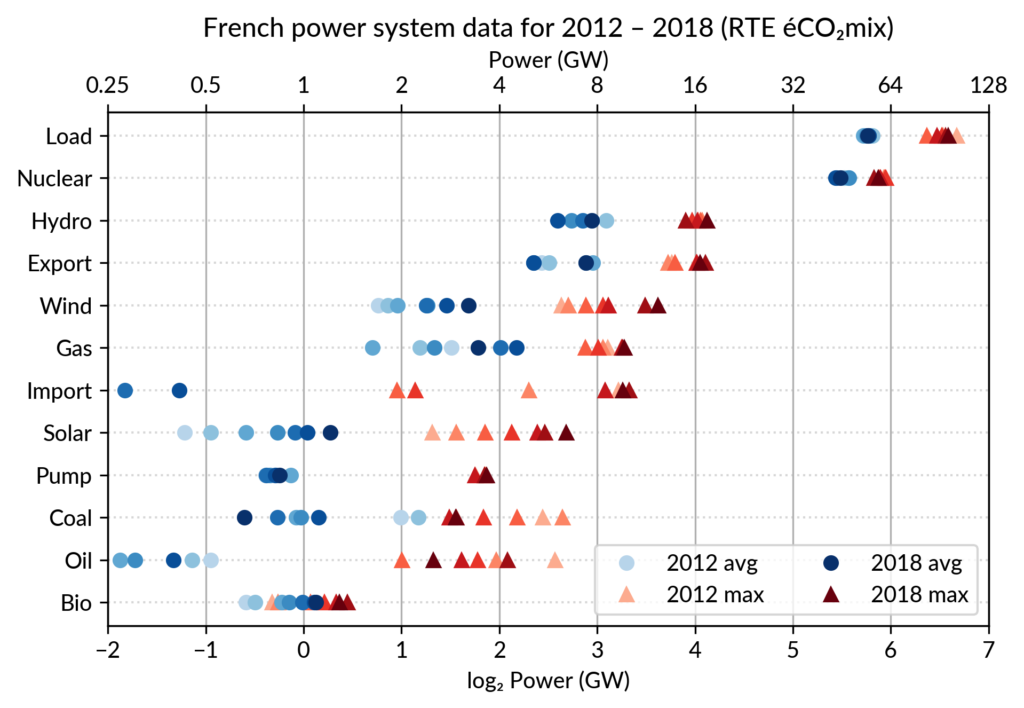

Again trying to pack more data on the same chart, I superimposed the statistics for several years. RTE’s data is available from 2012 to 2018 (2019 is still in the making…) and the year can be encoded by the lightness of the dots.

I think it is possible to perceive interesting information from this chart (like the rise of Solar and Wind along with the drop of Coal), but it may be a bit too crowded. With only two or three years (e.g. 2012-2015-2018), it is fine though.

A better alternative may be to use an animation. I tried two solutions:

To assemble a set of PNG images (Dotplot_2012.png to Dotplot_2018.png) into a GIF animation, I used the following “incantation” of ImageMagick:

```
convert -delay 30 -loop 0 Dotplot_*.png Dotplot_anim.gif
```

The -delay 30 option sets a frame duration of 30/100 seconds, so about 3 images/s.

The result is nice but it is not possible to pause on a given year for a closer inspection. Using a video file format instead of GIF, pausing would be possible, but a convenient way to browse through the years would be much better.

For a truly interactive plot, I’ve played with Altair, the Python package based on the Vega/Vega-Lite JavaScript libraries. It’s the second or third time I’ve experimented with this library (all the other plots are made with Matplotlib). I find Altair appealing for its declarative programming interface and the fact that it is based on a sound visualization grammar. For example, it is based on a well-defined notion of visual encoding channels: position, color, shape…

For the present task, I wanted to explore more particularly the declarative description of interactivity, a feature added in late 2017/early 2018 with the release of Vega-Lite 2.0/Altair 2.0.

Here is the result, illustrated by a screencast video until I figure out how to embed a Vega-Lite chart in WordPress:

To experience the interactivity, here is a standalone HTML page with the plot: Dotplot_Powersys_interactive.html

Specifying such a user interaction with a chart starts with the description of a selection object like this:

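```python
import altair as alt

# Selection API as of Altair 4; Altair 5 deprecates selection_single
# in favor of selection_point
selector = alt.selection_single(
    fields=['year'],   # select all samples sharing the hovered point's year
    empty='none',      # an empty selection selects no data
    on='mouseover',
    nearest=True,      # pick the point nearest to the mouse
    init=alt.SelectionInitMapping(year=2018)  # initially selected year
)
```

Here fields=['year'] means that hovering one point will automatically select all the data samples having the same year. Then, the selection object is to be appended to one or several charts (so that the selection works seamlessly across charts). This is no more than calling .add_selection(selector) on each chart.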

Finally, the selection is used to conditionally set the color of plotting marks, or whatever visual encoding channel we may want to modify (size, opacity…). A condition takes a reference to the selection and two values: one for the selected case, the second when unselected. Here is, for example, the complete specification of the bottom chart which serves as a year selector:

```python
# base is a chart defined earlier (not shown here)
years = base.mark_point(filled=True, size=100).encode(
    x='year:O',
    color=alt.condition(selector,
                        alt.value('green'),       # color when selected
                        alt.value('lightgray')),  # color when unselected
).add_selection(selector)
```

The Vega-Lite compiler takes care of setting up all the input handling logic to make the interaction happen. A few days ago, I happened to read a Matlab blog post on creating a linked selection which operates across two scatter plots. As written in that post, “there’s a bit of setup required to link charts like this, but it really isn’t hard once you’ve learned the tricks”. This highlights that the back-office work of the Vega-Lite compiler is really admirable. Notice that doing it in Python with Matplotlib would be equally verbose, because it is not a matter of programming language but of imperative versus declarative plotting libraries.

Here are the few other pages I found on Cleveland’s dot plots, one with Tableau and one with R/ggplot:

I created the plots using RTE éCO₂mix hourly records to generate the yearly statistics. For an unknown reason, when I sum the powers over the year 2018, I get slightly different values compared to RTE’s official 2018 statistical report on electricity (« Bilan Électrique 2018 »). For example: Load 478 TWh vs 475.5 TWh, Wind 27.8 TWh vs 28.1 TWh… I don’t like having such unexplained differences, but at least they are small enough to be almost invisible in the plots.

All the Python code which generates the plots presented here can be found in the Dotplots_Powersys.ipynb Jupyter notebook, within my french-elec2 repository.